One language, any hardware.

Pythonic syntax.

Systems-level performance.

Mojo unifies high-level AI development with low-level systems programming. Write once, deploy everywhere, from CPUs to GPUs, without vendor lock-in.

Efficient element-wise addition of two tensors

Mojo function callable directly from Python

A device-targeted vector addition kernel

Why we built Mojo

Vendor lock-in is expensive

You're forced to choose: NVIDIA's CUDA, AMD's ROCm, or Intel's oneAPI. Rewrite everything when you switch vendors. Your code becomes a hostage to hardware politics.

The two-language tax

Prototype in Python. Rewrite in C++ for production. Debug across language boundaries. Your team splits into 'researchers' and 'engineers', and neither can work on the full stack.

Python hits a wall

Python is 1000x too slow for production AI. The GIL blocks true parallelism. Can't access GPUs directly. Every optimization means dropping into C extensions. Simplicity becomes a liability at scale.

Toolchain chaos

PyTorch for training. TensorRT for inference. vLLM for serving. Each tool has its own bugs, limitations, and learning curve. Integration nightmares multiply with every component.

Memory bugs in production

C++ gives you footguns by default. Race conditions in parallel code. Memory leaks that OOM your servers. Segfaults in production at 3 AM.

Developer experience ignored

30-minute build times. Cryptic template errors. Debuggers that can't inspect GPU state. Profilers that lie about performance. Modern developers deserve tools that accelerate, not frustrate.

Why should I use Mojo?

Easier

GPU Programming Made Easy

Traditionally, writing custom GPU code means diving into CUDA, managing memory, and compiling separate device code. Mojo simplifies the whole experience while unlocking top-tier performance on NVIDIA and AMD GPUs.

GPU-specific coordinates for MMA tile processing

Performant

Bare-metal performance on any GPU

Get raw GPU performance without complex toolchains. Mojo makes it easy to write high-performance kernels with intuitive syntax, zero boilerplate, and native support for NVIDIA, AMD, and more.

Using low-level GPU warp instructions ergonomically

Interoperable

Use Mojo to extend Python

Mojo interoperates natively with Python, so you can speed up bottlenecks without rewriting everything. Start with one function and scale as needed; Mojo fits into your codebase.

Call a Mojo function from Python

Community

Build with us in the open to create the future of AI

Mojo has more than 750K lines of open-source code and an active community of more than 50K members. We're actively working to open even more to build a transparent, developer-first foundation for the future of AI infrastructure.

MOJO + MAX

Write GPU Kernels with MAX

Define a custom GPU subtraction kernel

Production ready

Powering Breakthroughs in Production AI

Top AI teams use Mojo to turn ideas into optimized, low-level GPU code. From Inworld’s custom logic to Qwerky’s memory-efficient Mamba, Mojo delivers where performance meets creativity.

Modern tooling

World-Class Tools, Out of the Box

Mojo ships with a great VS Code debugger and works with dev tools like Cursor and Claude, so modern dev workflows feel seamless.

Mojo extension in VS Code

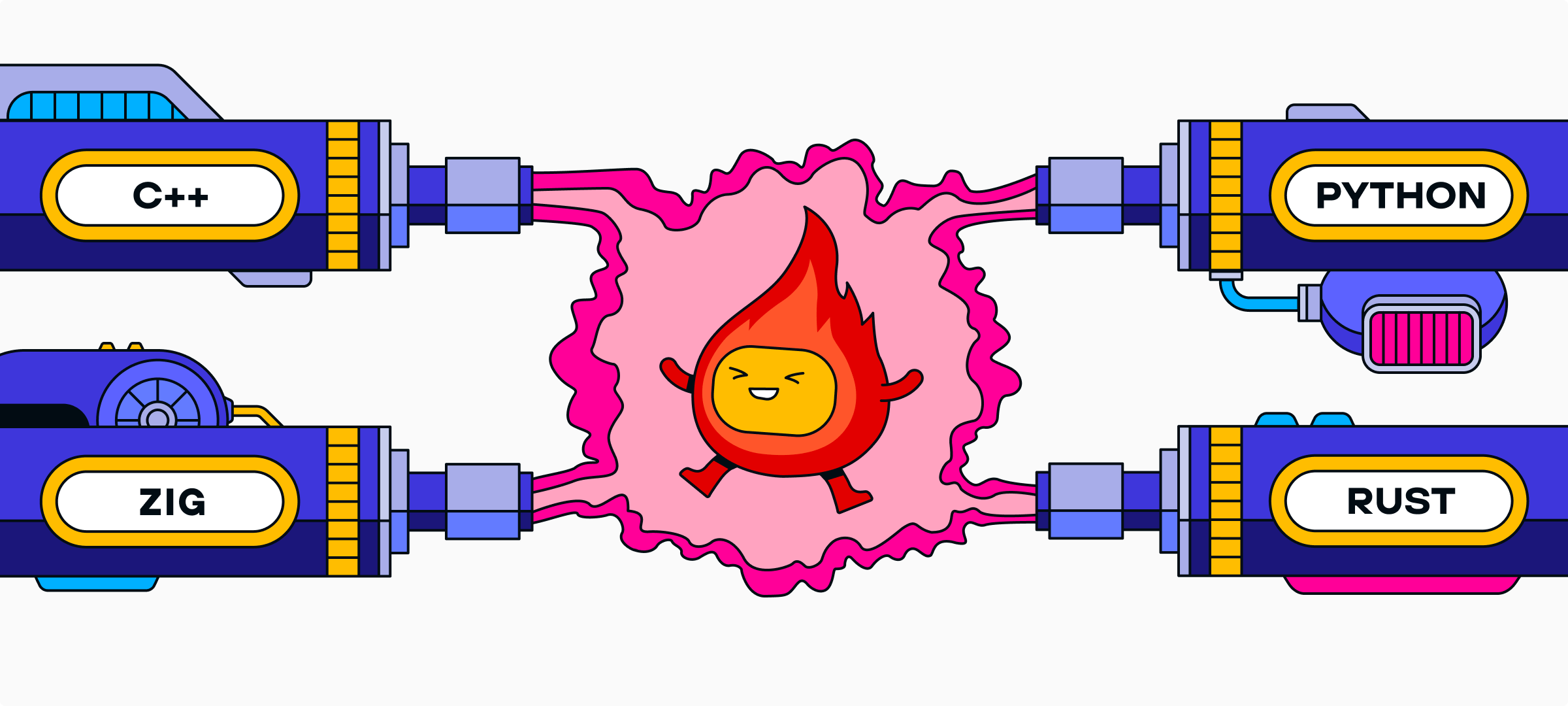

Mojo learns from

What Mojo keeps from C++

Zero cost abstractions

Metaprogramming power

Turing complete: can build a compiler in templates

Low level hardware control

Inline asm, intrinsics, zero dependencies

Unified host/device language

What Mojo improves about C++

Slow compile times

Template error messages

Limited metaprogramming

...and that templates != normal code

Not MLIR-native

What Mojo keeps from Python

Minimal boilerplate

Easy-to-read syntax

Interoperability with the massive Python ecosystem

What Mojo improves about Python

Performance

Memory usage

Device portability

What Mojo keeps from Rust

Memory safety through a borrow checker

Systems language performance

What Mojo improves about Rust

More flexible ownership semantics

Easier to learn

More readable syntax

What Mojo keeps from Zig

Compile-time metaprogramming

Systems language performance

What Mojo improves about Zig

Memory safety

More readable syntax

“Mojo has Python feel, systems speed. Clean syntax, blazing performance.”

Explore the world of high-performance computing through an illustrated comic. A fresh, fun take, whether you're new or experienced.

Get started with Mojo

Mojo Manual

Learn how to write a simple program that performs vector addition on a GPU, exploring fundamental concepts of GPU programming.

GPU Puzzles

A hands-on guide to mastering GPU programming using Mojo’s powerful abstractions and performance capabilities.

Python Interoperability

Because Mojo uses a Pythonic syntax, it's easy to start reading and writing Mojo when coming from Python.

Popular Mojo Tech Talks

Developer Approved

"after wrestling with CUDA drivers for years, it felt surprisingly… smooth. No, really: for once I wasn’t battling obscure libstdc++ errors at midnight or re-compiling kernels to coax out speed. Instead, I got a peek at writing almost-Pythonic code that compiles down to something that actually flies on the GPU."

"This is about unlocking freedom for devs like me, no more vendor traps or rewrites, just pure iteration power. As someone working on challenging ML problems, this is a big thing."

“The more I benchmark, the more impressed I am with the MAX Engine.”

“I tried MAX builds last night, impressive indeed. I couldn't believe what I was seeing... performance is insane.”

“It’s fast which is awesome. And it’s easy. It’s not CUDA programming...easy to optimize.”

“A few weeks ago, I started learning Mojo and MAX. Mojo has the potential to take over AI development. It's Python++. Simple to learn, and extremely fast.”

“Max installation on Mac M2 and running llama3 in (q6_k and q4_k) was a breeze! Thank you Modular team!”

"Mojo is Python++. It will be, when complete, a strict superset of the Python language. But it also has additional functionality so we can write high performance code that takes advantage of modern accelerators."

“Tired of the two language problem. I have one foot in the ML world and one foot in the geospatial world, and both struggle with the 'two-language' problem. Having Mojo - as one language all the way through would be awesome.”

“Mojo can replace the C programs too. It works across the stack. It’s not glue code. It’s the whole ecosystem.”

“What @modular is doing with Mojo and the MaxPlatform is a completely different ballgame.”

“I am focusing my time to help advance @Modular. I may be starting from scratch but I feel it’s what I need to do to contribute to #AI for the next generation.”

“Mojo and the MAX Graph API are the surest bet for longterm multi-arch future-substrate NN compilation”

“Mojo destroys Python in speed. 12x faster without even trying. The future is bright!”

"Mojo gives me the feeling of superpowers. I did not expect it to outperform a well-known solution like llama.cpp."

“I'm very excited to see this coming together and what it represents, not just for MAX, but my hope for what it could also mean for the broader ecosystem that mojo could interact with.”

"It worked like a charm, with impressive speed. Now my version is about twice as fast as Julia's (7 ms vs. 12 ms for a 10 million vector; 7 ms on the playground. I guess on my computer, it might be even faster). Amazing."

“I'm excited, you're excited, everyone is excited to see what's new in Mojo and MAX and the amazing achievements of the team at Modular.”

“The Community is incredible and so supportive. It’s awesome to be part of.”

"C is known for being as fast as assembly, but when we implemented the same logic on Mojo and used some of the out-of-the-box features, it showed a huge increase in performance... It was amazing."

Get started with Mojo

Quick start resources

Get started guide

With just a few commands, you can install MAX as a conda package and deploy a GenAI model on a local endpoint.

Browse open source models

500+ supported models, most of which have been optimized for lightning-fast speed on the Modular platform.

Find examples

Follow step-by-step recipes to build agents, chatbots, and more with MAX.